Sense of touch

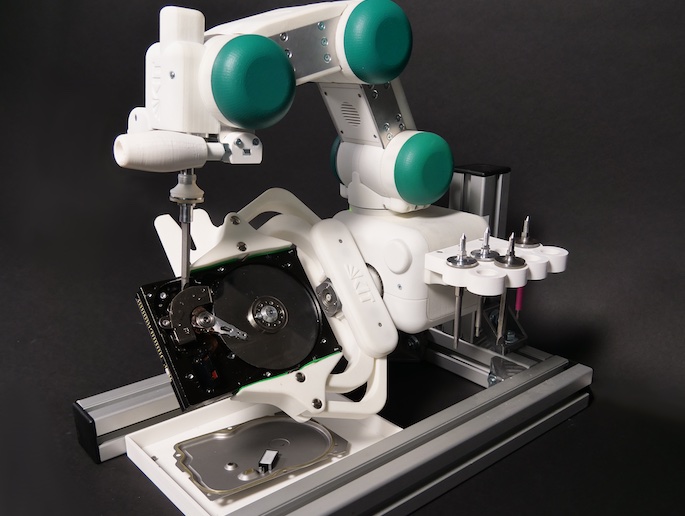

Interpreting sensory input from our surroundings creates images of the environment on which we can base our actions, thoughts and automatic responses. This remarkably complex process is easily taken for granted until a sense is lost. The aptly named SENSEMAKER project set as its main aim the creation of electronic devices that take sensory input from different modalities. Processing this information would then provide the user with a representation of their environment. Just as the brain extracts the relevant information from sensory representations, the software platforms would selectively process information from the different modalities. Project partners worked with the senses of vision, audition and haptics (the sense of touch), together with an internal motor command facility.
As part of the biological component of the project, partners at Trinity College Dublin in Ireland chose to investigate the tactile sense and how the brain interprets it. How the central nervous system actually processes sensory information would be a crucial consideration in designing software for the project. It is known, for example, that the visual sense operates on a dual basis, with one pathway for spatial processing and another for recognition. The team used both behavioural measures and functional magnetic resonance imaging (fMRI) to investigate responses to unfamiliar stimuli. Results from the fMRI scans showed that haptic information occupied a shared network of areas in the cortex. Overall, however, spatial information is handled by the occipito-parietal pathway, whereas recognition is processed by the occipito-temporal pathway. Behavioural tests supported these conclusions and showed that the two functions are task-dependent and do not interfere with each other.
Interestingly, vision was found to affect both tactile spatial processing and tactile object recognition, and when visual information is reduced, combining the two senses enhances behavioural performance.
In conjunction with the Electronic Vision Group at the Kirchhoff-Institut für Physik at the University of Heidelberg, the team from Dublin developed the Virtual Haptic Display (VHD) device. Its innovation over the predecessor model is that it does not rely on passive input but requires active exploration. The image is displayed either as a whole or in part by means of a controllable aperture. The technology has a wide range of potential applications. Patients with impaired sensory perception stand to benefit, and it could also be used in learning aids and in the analysis and depiction of environments in hazardous situations. Now and in the future, there are obvious applications in cognitive robotics, autonomous systems and distributed intelligence.