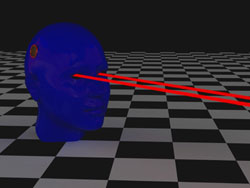

Visualising speech technology

Precise knowledge of how human anatomy functions under both physiological and pathological conditions is vital in several medical fields, biomedical engineering among them. The 3D features of functional anatomy are particularly important, but they are not easily represented in a coherent manner. Visualising and manipulating anatomical objects is therefore an ambitious testing ground for innovative methods. In light of this, the MULTISENSE project was concerned with visualising, and integrating, data associated with musculo-skeletal structures through multi-modal and multi-sensorial interfaces. The project created a user-friendly visualisation and interaction environment that presents all of the information through a set of representation-interaction pairs, analogous to the imaging modalities that biomedical professionals use.

As part of this new representation and interaction paradigm for virtual medical objects, the project developed a speech framework. It can connect to any speech recogniser that supports context-free grammars and to any speech synthesiser, and it is also compatible with low-level speech technology components. An additional feature is a speech utterance detector, which determines the start and end points of a spoken utterance and can help in interpreting the textual content of that utterance.
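The article does not say how the MULTISENSE utterance detector works. A common way to find the start and end points of an utterance is short-time energy thresholding, and the sketch below illustrates that technique only. It is not the project's method. The function names (`frame_energies`, `detect_utterance`) and every parameter value are hypothetical. For example, `frame_len=160` assumes 10 ms frames at a 16 kHz sample rate. The sketch also assumes samples normalised to the range [-1, 1].

```python
import math
from typing import List, Optional, Tuple


def frame_energies(samples: List[float], frame_len: int) -> List[float]:
    """RMS energy of each non-overlapping frame; a trailing partial frame is dropped."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def detect_utterance(
    samples: List[float],
    frame_len: int = 160,         # hypothetical: 10 ms at 16 kHz
    threshold: float = 0.02,      # hypothetical RMS level separating speech from silence
    min_speech_frames: int = 3,   # speech must persist this long to count as a start
    min_silence_frames: int = 5,  # silence must persist this long to count as an end
) -> Optional[Tuple[int, int]]:
    """Return (start_sample, end_sample) of the first utterance, or None if none is found.

    Energy-threshold endpointing, shown as an illustrative assumption only.
    """
    is_speech = [e > threshold for e in frame_energies(samples, frame_len)]

    # Start point: first frame of the first run of enough consecutive speech frames,
    # so short clicks or noise bursts are ignored.
    start_frame = None
    run = 0
    for i, speech in enumerate(is_speech):
        run = run + 1 if speech else 0
        if run >= min_speech_frames:
            start_frame = i - min_speech_frames + 1
            break
    if start_frame is None:
        return None

    # End point: last speech frame before a sufficiently long silence,
    # so brief pauses between words do not end the utterance.
    end_frame = start_frame
    silence = 0
    for i in range(start_frame, len(is_speech)):
        if is_speech[i]:
            end_frame = i
            silence = 0
        else:
            silence += 1
            if silence >= min_silence_frames:
                break

    # Convert frame indices to sample indices (end is exclusive).
    return start_frame * frame_len, (end_frame + 1) * frame_len
```

Requiring several consecutive frames on both sides of the threshold trades a little latency for robustness. A real detector would usually adapt `threshold` to the measured background noise rather than fixing it.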