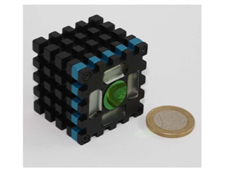

Project Success Stories - Time-of-flight cameras set to take off

Thomas, a quadriplegic adolescent, can laboriously move his head just millimetres from side to side. It is the only movement he is able to make. With new 3D time-of-flight (TOF) hardware and software developed by European researchers, though, that tiny movement is enough to control a low-cost input device for a computer, suddenly unlocking the world of communication for Thomas.

This fictional scenario illustrates the power and significance of breakthrough technology recently created by an EU-funded research project. The 'Action recognition and tracking based on time-of-flight sensors' (ARTTS) project sought to improve the underlying technology and develop new algorithms, the codes that control the sensors, for action recognition and object tracking.

TOF cameras are a hot, emerging technology. 'Microsoft recently bought the Israeli TOF company 3DV Systems because they want to use the technology in their next-generation console,' explains Professor Erhardt Barth, deputy director of the Institute for Neuro- and Bioinformatics at the University of Lübeck, Germany, and coordinator of the ARTTS project. 'The main thrust of our research was human-computer interaction using TOF.'

The principle behind TOF is simple. The sensor measures the time it takes light to travel from the camera to an object and back (the time of flight) and uses this data to calculate the distance to the object. Like stereovision systems and laser scanners, a TOF camera captures 3D data, but it is cheaper and more adaptable than either.

Faster, leaner, cheaper

To make TOF-based human-computer interaction practical, the researchers had to embark on a highly ambitious programme of work. They had to dramatically improve TOF cameras over anything that currently exists, by increasing the depth resolution and the quality of the signal while reducing size, power consumption and cost.
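
To put rough numbers on the time-of-flight principle described above, here is a minimal Python sketch of the basic relation: distance equals the speed of light multiplied by the round-trip time, divided by two. The function names are illustrative only, and the sketch says nothing about how the ARTTS sensors actually measure time internally.

```python
# Speed of light in vacuum (exact SI value), in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to the object, given the light's round-trip travel time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light covers the camera-to-object distance twice, so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Inverse relation: round-trip travel time for an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Print the round-trip time, in nanoseconds, for a few example distances.
    for d in (0.5, 1.0, 7.0):
        t_ns = round_trip_from_distance(d) * 1e9
        print(f"{d:4.1f} m -> round trip of {t_ns:6.2f} ns")
```

For an object one metre away the round trip lasts under 7 nanoseconds, which gives a sense of why improving depth resolution is such a demanding goal.
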
'We had to show that you could develop sensors… small enough that you can imagine people putting them into webcams, computers and even mobile devices,' stresses Prof. Barth. 'What makes this new camera different is that it really is a lot smaller and more power efficient than what existed before. It only requires a USB port for power and it still retains the properties of the other machines that are much bigger, heavier and more energy-consuming.'

But smaller, cheaper and more efficient was not enough: the camera had to be accurate too. 'Accuracy is another important point,' notes Prof. Barth. 'This camera uses active illumination, so there are some infrared diodes in the camera, but what makes this illumination special is that it is modulated: the brightness changes very quickly over time, and so the accuracy depends on the frequency, that is, how fast this illumination is changing. We managed to build a 60-megahertz light source. It changes 60 million times a second, which makes the camera much more accurate in the near range.'

Other cameras are optimised for the far range, from 1 metre to 7 metres and beyond, but the ARTTS camera is more accurate within a metre, which is the relevant distance for using gestures with smartphones. 'The iPhone made people familiar with using gestures, and so the next step would be to have interaction without touch, and that's where we come in.'

'So, actually I think the ARTTS camera developed by partner CSEM is the main competition to what Microsoft now has. I think ours is better, in fact, but it needs more work to become a mass market product,' Prof. Barth emphasises.

Dual camera

The team also developed a second camera that combines a low-resolution TOF sensor with a high-definition television (HDTV) quality image sensor.
'Partner SMI thinks this sensor will provide better functionality for security and health care, providing video links to elderly people, and alerting health-care workers if there is a problem with a patient, for example,' reveals Prof. Barth. It could, for instance, track people as they move through a room and recognise their actions.

He is very upbeat about this camera because even a low-resolution TOF sensor provides very usable distance data, whereas a low-resolution image sensor is less useful. 'I think this type of dual camera will be ideal for most environments and devices,' he notes. 'Essentially, it just uses a beam splitter that sends the infrared light to the TOF sensor and the visible light to the image sensor. Beam splitters are a mature, low-cost technology already widely used in camcorders.'

The hardware may be state of the art, but it cannot function without software. In this area, university teams from Denmark, Romania and Germany worked on three problems.

The first was generic, simply seeking to dramatically improve the quality of the signal from the TOF sensors, and the team used some very ingenious solutions. For example, Barth's group realised that light intensity and distance are not independent values but are related to each other by the shading constraint. If the reflectance properties of the observed object are known, the quality of the range maps can be dramatically improved, objectively and subjectively, by imposing the shading constraint. Other solutions improved the signal further.

The second software objective was to develop an algorithm for object tracking. 'I spent a lot of time before trying to track human features like the eyes and the nose and it is pretty hard,' reveals Prof. Barth, 'especially if you need to work in places like a car, where the light changes all the time.'

Subtle sensing

However, with the TOF sensors there are two measures: a distance map and a light intensity map.
Combined with the distance map, the intensity map is good enough to support useful applications. For example, by using this combination of information, ARTTS was able to develop various object-tracking algorithms which can track people, because the distance provides one measure that is corroborated by another from the light-intensity signal. This is an important basis for the team's other work.

Once the system can track an object reliably, it is ready to learn gesture recognition, which was the main goal of the ARTTS project from the beginning. And it turns out that the ARTTS system is capable of recognising quite subtle gestures. Although each pixel's distance value carries a measurement error, there are a lot of pixels. The errors average out across so many values, which dramatically increases sensitivity. In its current version, the system can even control a slideshow presentation using hand gestures to point, change slides, go back and more.

The applications of these technologies are potentially endless. After an intensely competitive application process, the project received EUR 400 000 of funding from the German government to create a start-up which will provide both technology and services. Customers can buy a hardware and software platform to develop their own applications, or they can hire the new firm, TOF-GT, to develop the application for them.

The team is developing an advertising application for the Vienna train station. It consists of a 12-metre-wide high-definition screen and a number of TOF cameras. The cameras will enable commuters to interact with the screen as it plays advertisements. It could also be used to conduct surveys or handle customer information. The team is also considering a smaller version for use in stores or shopping centres.

It 'is' brain surgery

The team is also collaborating with neurosurgeons and a medical instrument company to develop gestures for the operating room.
Neurosurgeons use a vast amount of information in a variety of formats: images, vital signs and read-outs from a large number of instruments. 'Right now, a surgeon has to put down his tool, change the data view and then proceed, but we will develop a system that will make changes in response to specific gestures. It is not a research project; it will become a new application.'

It is a taste of things to come for the technology. Currently, the widest application of TOF sensors is in the dairy industry, where they are used in automatic milking machines to connect to the cow's udder. But the potential applications of TOF sensors are far broader and now, thanks to the ARTTS project, the sensors are small, affordable, efficient and optimised for object tracking and gesture recognition.

ARTTS was funded under the IST strand of the EU's Sixth Framework Programme for research.

Image caption: ARTTS camera: compact and powered via USB